Apple MacBook Pro 16.2" with M1 Max chip, 10-core CPU, 64GB unified memory, 1TB SSD, 32-core GPU, 16-core Neural Engine and Liquid Retina display

Energy-friendly chip can perform powerful artificial-intelligence tasks | MIT News | Massachusetts Institute of Technology

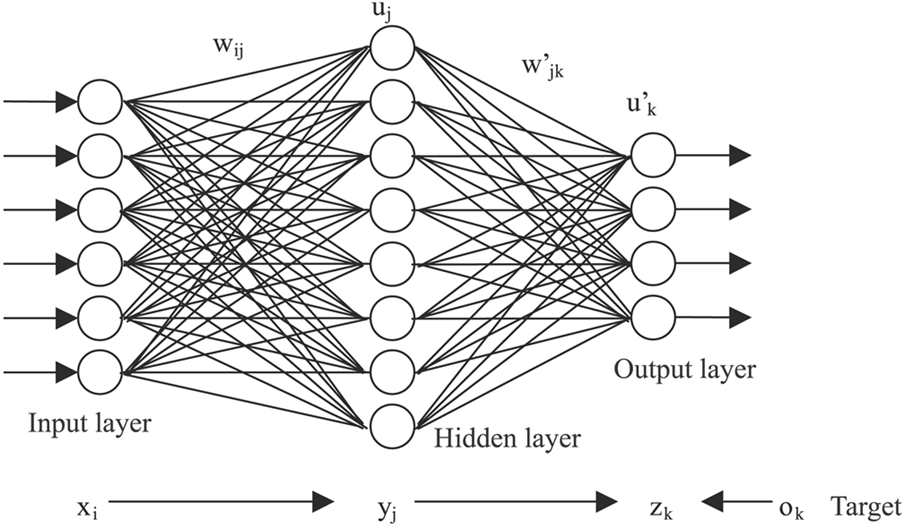

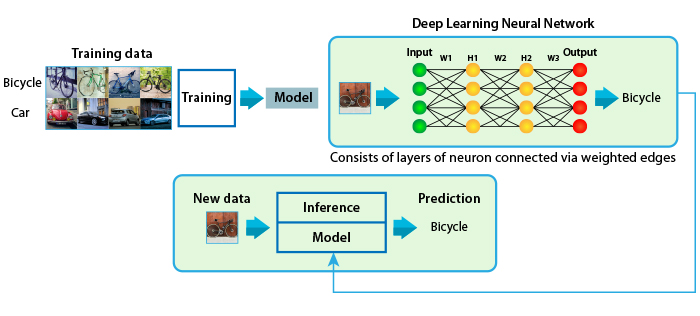

If I'm building a deep learning neural network with a lot of computing power to learn, do I need more memory, CPU or GPU? - Quora

Researchers at the University of Michigan Develop Zeus: A Machine Learning-Based Framework for Optimizing GPU Energy Consumption of Deep Neural Networks (DNNs) Training - MarkTechPost

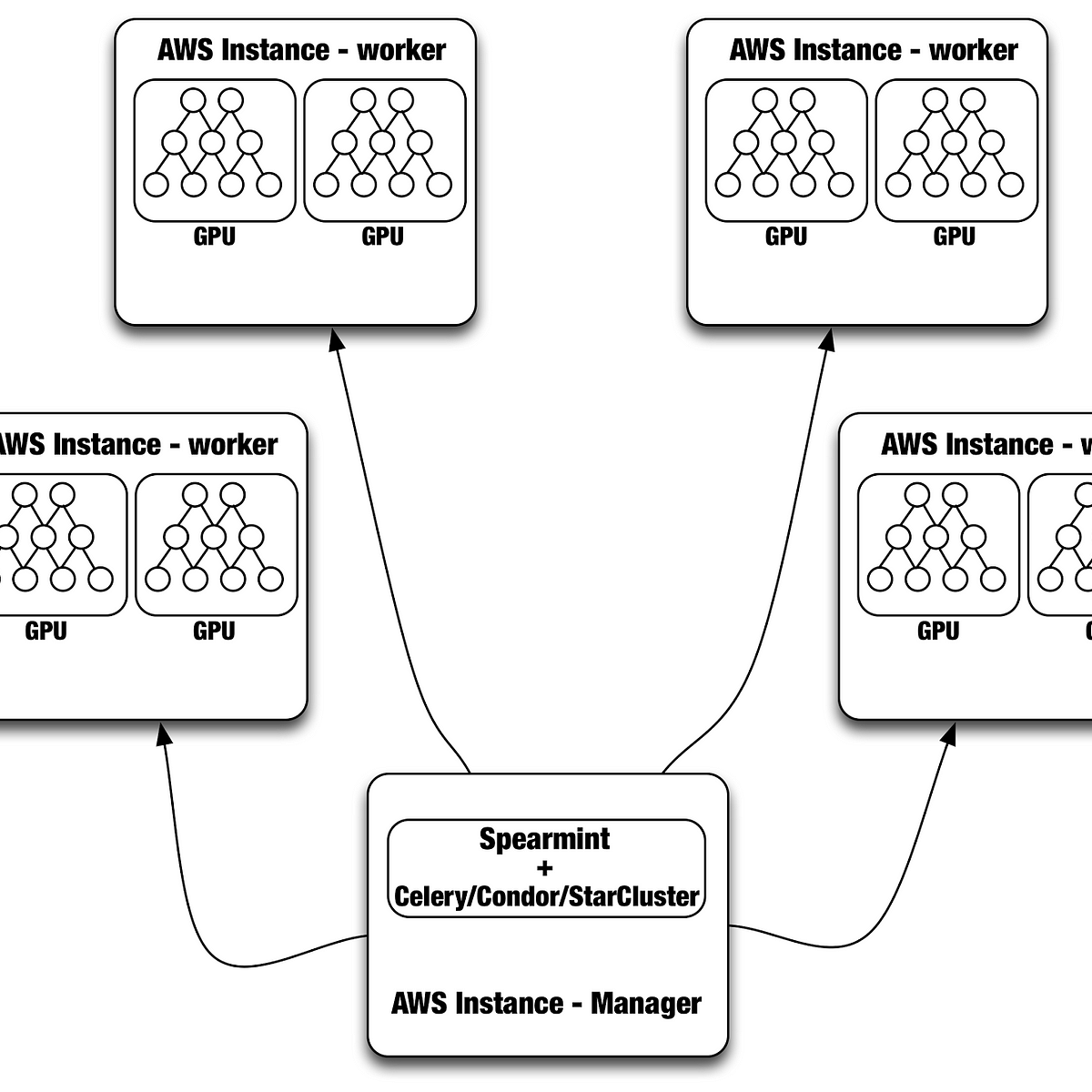

PARsE | Education | GPU Cluster | Efficient mapping of the training of Convolutional Neural Networks to a CUDA-based cluster

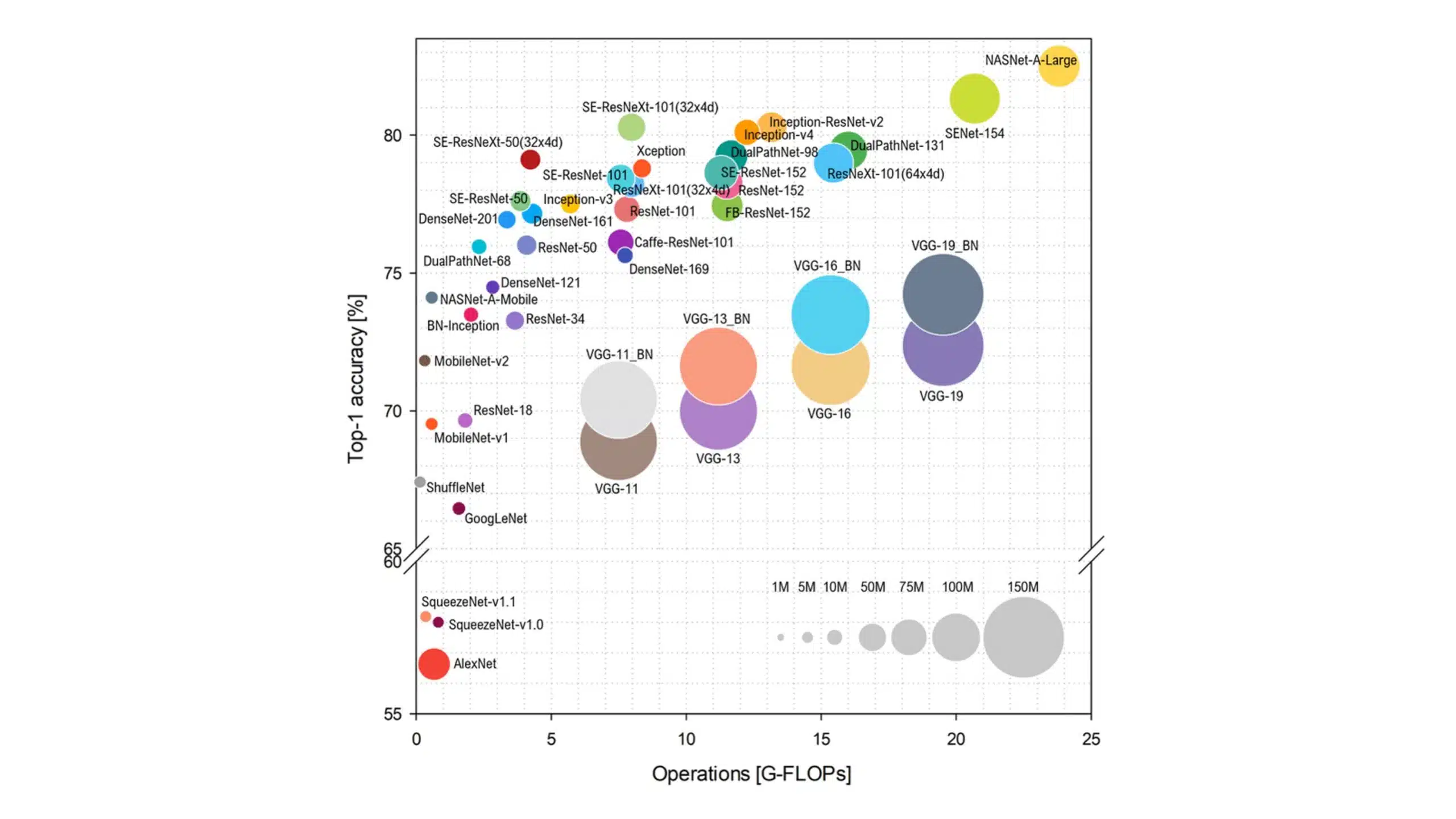

Discovering GPU-friendly Deep Neural Networks with Unified Neural Architecture Search | NVIDIA Technical Blog

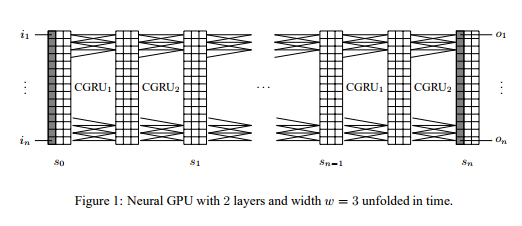

![[PDF] Neural GPUs Learn Algorithms | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/5e4eb58d5b47ac1c73f4cf189497170e75ae6237/4-Figure1-1.png)